Appendix: Weak supervision

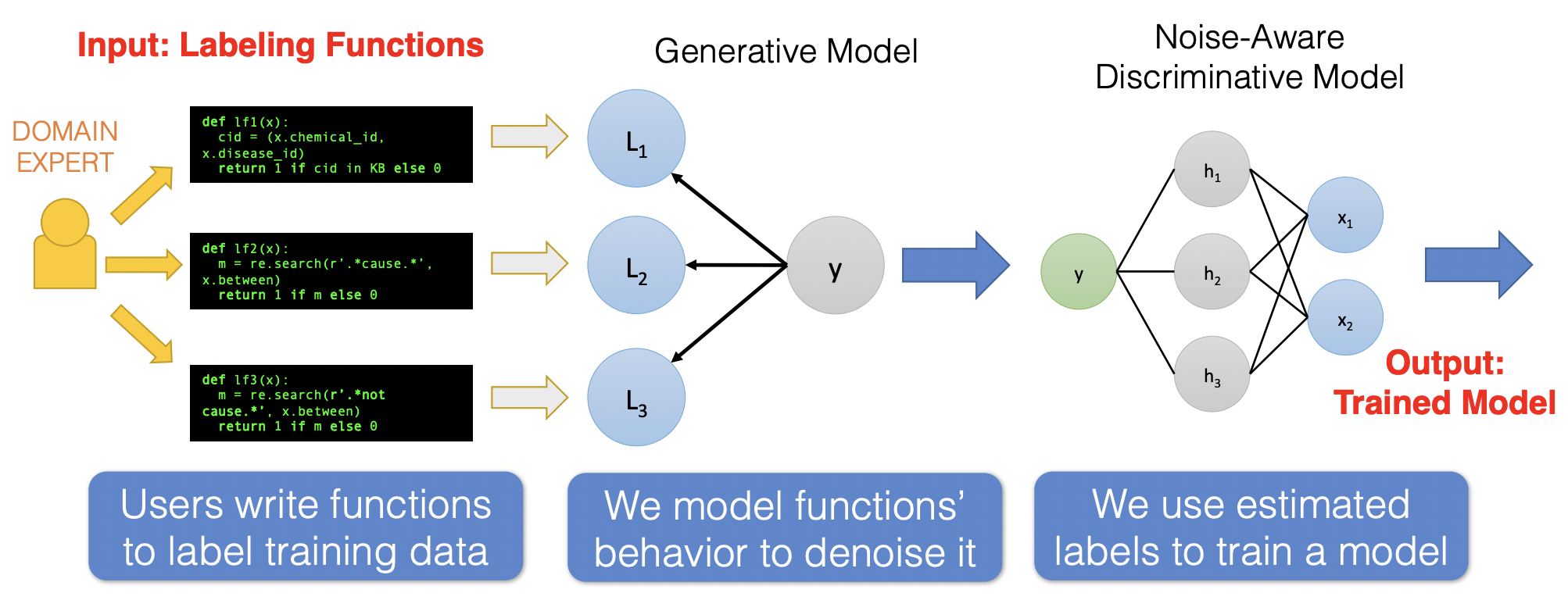

In this section, we apply what we learned in this chapter to implement weak supervision. The idea of weak supervision is that for each data point there is a latent true label that we do not have access to (even during training), instead we can utilize weak signals from user-defined labeling functions (LFs).

LFs can be thought of as heuristic rules that can be applied to a large subset of the data. In case the LF is not applicable, then the function simply abstains from making a prediction. Note that this is a realistic scenario, it’s easier to describe rules than to manually annotate a large number of data. LFs provides noisy, less expensive labels, which can be useful in training noise-aware discriminative models.

Fig. 17 Pipeline for training a model based on weakly supervised labels. The third image is flipped (i.e. the flow of prediction is right to left.) These two tasks will be implemented in this notebook. Source

Remark. The material here is an exposition and implementation of the data programming approach to weak supervision using LFs described in [RSW+17].

Toy example

Our task is to classify whether a sentence is a question or a quote. We will use true labels to evaluate the train and validation performance of the model. But this will not be used during training. In practice, we have a small labeled dataset for validation, that is a part of a large unlabeled dataset, which we want to somehow use for training. The toy dataset consists of 88 sentences:

data = [

"What would you name your boat if you had one? ",

"What's the closest thing to real magic? ",

"Who is the messiest person you know? ",

"What will finally break the internet? ",

"What's the most useless talent you have? ",

"What would be on the gag reel of your life? ",

...

]

Show code cell source

data = [

"What would you name your boat if you had one? ",

"What's the closest thing to real magic? ",

"Who is the messiest person you know? ",

"What will finally break the internet? ",

"What's the most useless talent you have? ",

"What would be on the gag reel of your life? ",

"Where is the worst smelling place you've been?",

"What Secret Do You Have That No One Else Knows Except Your Sibling/S?"

"What Did You Think Was Cool Then, When You Were Young But Isn’t Cool Now?"

"When Was The Last Time You Did Something And Regret Doing It?"

"What Guilty Pleasure Makes You Feel Alive?"

"Any fool can write code that a computer can understand. Good programmers write code that humans can understand.",

"First, solve the problem. Then, write the code.",

"Experience is the name everyone gives to their mistakes.",

" In order to be irreplaceable, one must always be different",

"Java is to JavaScript what car is to Carpet.",

"Knowledge is power.",

"Sometimes it pays to stay in bed on Monday, rather than spending the rest of the week debugging Monday’s code.",

"Perfection is achieved not when there is nothing more to add, but rather when there is nothing more to take away.",

"Ruby is rubbish! PHP is phpantastic!",

" Code is like humor. When you have to explain it, it’s bad.",

"Fix the cause, not the symptom.",

"Optimism is an occupational hazard of programming: feedback is the treatment. " ,

"When to use iterative development? You should use iterative development only on projects that you want to succeed.",

"Simplicity is the soul of efficiency.",

"Before software can be reusable it first has to be usable.",

"Make it work, make it right, make it fast.",

"Programmer: A machine that turns coffee into code.",

"Computers are fast; programmers keep it slow.",

"When I wrote this code, only God and I understood what I did. Now only God knows.",

"A son asked his father (a programmer) why the sun rises in the east, and sets in the west. His response? It works, don’t touch!",

"How many programmers does it take to change a light bulb? None, that’s a hardware problem.",

"Programming is like sex: One mistake and you have to support it for the rest of your life.",

"Programming can be fun, and so can cryptography; however, they should not be combined.",

"Programming today is a race between software engineers striving to build bigger and better idiot-proof programs, and the Universe trying to produce bigger and better idiots. So far, the Universe is winning.",

"Copy-and-Paste was programmed by programmers for programmers actually.",

"Always code as if the person who ends up maintaining your code will be a violent psychopath who knows where you live.",

"Debugging is twice as hard as writing the code in the first place. Therefore, if you write the code as cleverly as possible, you are, by definition, not smart enough to debug it.",

"Algorithm: Word used by programmers when they don’t want to explain what they did.",

"Software and cathedrals are much the same — first we build them, then we pray.",

"There are two ways to write error-free programs; only the third works.",

"If debugging is the process of removing bugs, then programming must be the process of putting them in.",

"99 little bugs in the code. 99 little bugs in the code. Take one down, patch it around. 127 little bugs in the code …",

"Remember that there is no code faster than no code.",

"One man’s crappy software is another man’s full-time job.",

"No code has zero defects.",

"A good programmer is someone who always looks both ways before crossing a one-way street.",

"Deleted code is debugged code.",

"Don’t worry if it doesn’t work right. If everything did, you’d be out of a job.",

"It’s not a bug — it’s an undocumented feature.",

"It works on my machine.",

"It compiles; ship it.",

"There is no Ctrl-Z in life.",

"Whitespace is never white.",

"What’s your favorite way to spend a day off?",

"What type of music are you into?",

"What was the best vacation you ever took and why?",

"Where’s the next place on your travel bucket list and why?",

"What are your hobbies, and how did you get into them?",

"What was your favorite age growing up?",

"Was the last thing you read?",

"Would you say you’re more of an extrovert or an introvert?",

"What's your favorite ice cream topping?",

"What was the last TV show you binge-watched?",

"Are you into podcasts or do you only listen to music?",

"Do you have a favorite holiday? Why or why not?",

"If you could only eat one food for the rest of your life, what would it be?",

"Do you like going to the movies or prefer watching at home?",

"What’s your favorite sleeping position?",

"What’s your go-to guilty pleasure?",

"In the summer, would you rather go to the beach or go camping?",

"What’s your favorite quote from a TV show/movie/book?",

"How old were you when you had your first celebrity crush, and who was it?",

"What's one thing that can instantly make your day better?",

"Do you have any pet peeves?",

"What’s your favorite thing about your current job?",

"What annoys you most?",

"What’s the career highlight you’re most proud of?",

"Do you think you’ll stay in your current gig awhile? Why or why not?",

"What type of role do you want to take on after this one?",

"Are you more of a work to live or a live to work type of person?",

"Does your job make you feel happy and fulfilled? Why or why not?",

"How would your 10-year-old self react to what you do now?",

"What do you remember most about your first job?",

"How old were you when you started working?",

"What’s the worst job you’ve ever had?",

"What originally got you interested in your current field of work?",

"Have you ever had a side hustle or considered having one?",

"What’s your favorite part of the workday?",

"What’s the best career decision you’ve ever made?",

"What’s the worst career decision you’ve ever made?",

"Do you consider yourself good at networking?"

]

Then, we can define labeling functions. Notice that these are user-defined and requires some domain expertise to be effective:

QUESTION = 1

QUOTE = -1

ABSTAIN = 0

def lf_keyword_lookup(x):

keywords = ["why", "what", "when", "who", "where", "how"]

return QUESTION if any(word in x.lower() for word in keywords) else ABSTAIN

def lf_char_length(x):

return QUOTE if len(x) > 100 else ABSTAIN

def lf_regex_endswith_dot(x):

return QUOTE if x.endswith(".") else ABSTAIN

Coverage and accuracy

Applying this to our dataset we get the \(\Lambda_{ij}\) matrix. We will also assign the true label:

import pandas as pd

df = pd.DataFrame({"sentences": data})

df["LF1"] = df.sentences.map(lf_keyword_lookup)

df["LF2"] = df.sentences.map(lf_char_length)

df["LF3"] = df.sentences.map(lf_regex_endswith_dot)

df["y"] = df.sentences.map(lambda x: 1 if x.strip().endswith("?") else -1)

df.head()

| sentences | LF1 | LF2 | LF3 | y | |

|---|---|---|---|---|---|

| 0 | What would you name your boat if you had one? | 1 | 0 | 0 | 1 |

| 1 | What's the closest thing to real magic? | 1 | 0 | 0 | 1 |

| 2 | Who is the messiest person you know? | 1 | 0 | 0 | 1 |

| 3 | What will finally break the internet? | 1 | 0 | 0 | 1 |

| 4 | What's the most useless talent you have? | 1 | 0 | 0 | 1 |

Note that LF2 is intuitively not a good LF function. Indeed, we can look at the strength of the signals of each LF by comparing it with the true label. A labeling function is characterized by its coverage and accuracy (i.e. given it did not abstain):

# Note: the ff. shows *latent* LF parameters

print(f"coverage: {(df.LF1 != 0).mean():.3f} acc: {(df[df.LF1 != 0].LF1 == df[df.LF1 != 0].y).mean():.3f}")

print(f"coverage: {(df.LF2 != 0).mean():.3f} acc: {(df[df.LF2 != 0].LF2 == df[df.LF2 != 0].y).mean():.3f}")

print(f"coverage: {(df.LF3 != 0).mean():.3f} acc: {(df[df.LF3 != 0].LF3 == df[df.LF3 != 0].y).mean():.3f}")

coverage: 0.545 acc: 0.750

coverage: 0.114 acc: 1.000

coverage: 0.432 acc: 1.000

Remark. Note that we generally do not have access to true labels for a representative sample of our dataset. Hence, even estimating the parameters of our LFs are not possible. The following sections will deal with algorithms for estimating these parameters as well as training a machine learning model with noisy labels.